Lingk Rhythm

Modern Enterprise iPaaS that Scales Data Integrations

Orchestrate real-time pipelines and transformations to unify your systems and data into one consistent flow — ensuring every integration runs in harmony.

Platform Features

Lingk Rhythm goes beyond traditional integration platforms by creating a unified data fabric for seamless, scalable, and intelligent data integration for the agentic enterprise.

Hundreds of Pre-Built Data Connectors

Connect any system in any environment (cloud or on-prem). Lingk's pre-built connectors are designed to work seamlessly with top enterprise applications.

No-Code & Low-Code SQL-Based Data Transformations

Build and scale data flows using intuitive visual tools or write SQL for full control.

Big Data Processing Engine

The Lingk Platform is built on Apache Spark, the industry's most powerful serverless data processing engine, allowing you to process billions of data rows instantly through large data pipelines.

Automated Workflows & Orchestration

Easily schedule, trigger, and monitor data pipelines across their entire lifecycle, with built-in conditional logic, event-based triggers, intelligent duplicate prevention, retries, and real-time alerts.

Unified Data Model & Mapping Tools

Simplify complex data transformations between systems by aligning them to a common data structure, with tools for mapping, lineage tracking, and validation.

Data Agents

Lingk Orchestra takes on the role of an integration specialist, automating data mapping, generating ETL logic, and building recipes — turning weeks of work into seconds.

Unified Data Ecosystem

Integrate, Manage and Power Your Data for the Agentic Enterprise

Example use cases

Data Migration

Example: Migrating Data from SFTP to Salesforce

Migrating ERP banking data from SFTP into Salesforce

- Pull banking records from SFTP

- Transform and cleanse records

- Pull data from Salesforce

- Join SFTP and Salesforce data

- Upsert records in Salesforce
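The steps above can be sketched in plain Python. This is only an illustration under stated assumptions, not how a Lingk recipe is written: the CSV text stands in for a file pulled from SFTP, the `SALESFORCE_ROWS` list stands in for a Salesforce query result, and the field names (`account_number`, `Account_Number__c`) and helper functions are hypothetical.

```python
import csv
import io

# Hypothetical sample data standing in for an SFTP file and a Salesforce query result.
SFTP_CSV = """account_number,name,balance
 1001 ,Acme Corp,2500.00
1002,  Globex ,130.50
,Missing Key,10.00
"""

SALESFORCE_ROWS = [
    {"Id": "001A", "Account_Number__c": "1001", "Name": "Acme"},
]


def pull_sftp_records(text):
    """Parse the CSV text that would be downloaded from SFTP."""
    return list(csv.DictReader(io.StringIO(text)))


def cleanse(records):
    """Trim whitespace, cast balances to float, and drop rows without a key."""
    cleaned = []
    for row in records:
        key = row["account_number"].strip()
        if not key:
            continue  # a record without a join key cannot be matched or upserted
        cleaned.append({
            "account_number": key,
            "name": row["name"].strip(),
            "balance": float(row["balance"]),
        })
    return cleaned


def plan_upsert(sftp_records, salesforce_rows):
    """Join on account number and split records into updates and inserts."""
    existing = {r["Account_Number__c"]: r["Id"] for r in salesforce_rows}
    updates, inserts = [], []
    for rec in sftp_records:
        sf_id = existing.get(rec["account_number"])
        if sf_id:
            updates.append({"Id": sf_id, **rec})
        else:
            inserts.append(rec)
    return updates, inserts


updates, inserts = plan_upsert(cleanse(pull_sftp_records(SFTP_CSV)), SALESFORCE_ROWS)
print(len(updates), "updates,", len(inserts), "inserts")
```

Joining on a business key before writing is what makes the final step an upsert: records that already exist in Salesforce are updated by ID, and the rest are created.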

Dynamic Data Pipelines

Example: Data Pipeline from Salesforce to Snowflake data warehouse

Moving Salesforce Lead Data to Snowflake

- Pull today's leads from Salesforce

- Write records to Snowflake staging tables (ELT)

- Or transform records into predefined tables (ETL)

- Log statistics
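As a rough sketch of the ELT variant of this pipeline, the example below loads raw records into a staging table and then transforms them with SQL inside the target database. It rests on several assumptions: an in-memory SQLite database stands in for Snowflake, the `leads` list stands in for a Salesforce query, and the table names `stg_leads` and `dim_leads` are hypothetical.

```python
import sqlite3
from datetime import date

# Hypothetical leads standing in for a "today's leads" query against Salesforce.
today = date.today().isoformat()
leads = [
    ("00Q1", "Ada Lovelace", "ada@example.com", today),
    ("00Q2", "Alan Turing", "ALAN@EXAMPLE.COM", today),
]

# In-memory SQLite stands in for the warehouse in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_leads (id TEXT, name TEXT, email TEXT, created TEXT)")
conn.execute("CREATE TABLE dim_leads (id TEXT PRIMARY KEY, name TEXT, email TEXT)")

# ELT: load the raw records into staging first...
conn.executemany("INSERT INTO stg_leads VALUES (?, ?, ?, ?)", leads)

# ...then transform inside the warehouse with SQL.
conn.execute(
    "INSERT OR REPLACE INTO dim_leads (id, name, email) "
    "SELECT id, name, lower(email) FROM stg_leads WHERE created = ?",
    (today,),
)
conn.commit()

# Log simple run statistics.
stats = {
    "staged": conn.execute("SELECT count(*) FROM stg_leads").fetchone()[0],
    "loaded": conn.execute("SELECT count(*) FROM dim_leads").fetchone()[0],
}
print(stats)
```

In the ETL variant, the same normalization (here, lowercasing the email) would be applied before the write, so only the predefined table is populated.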

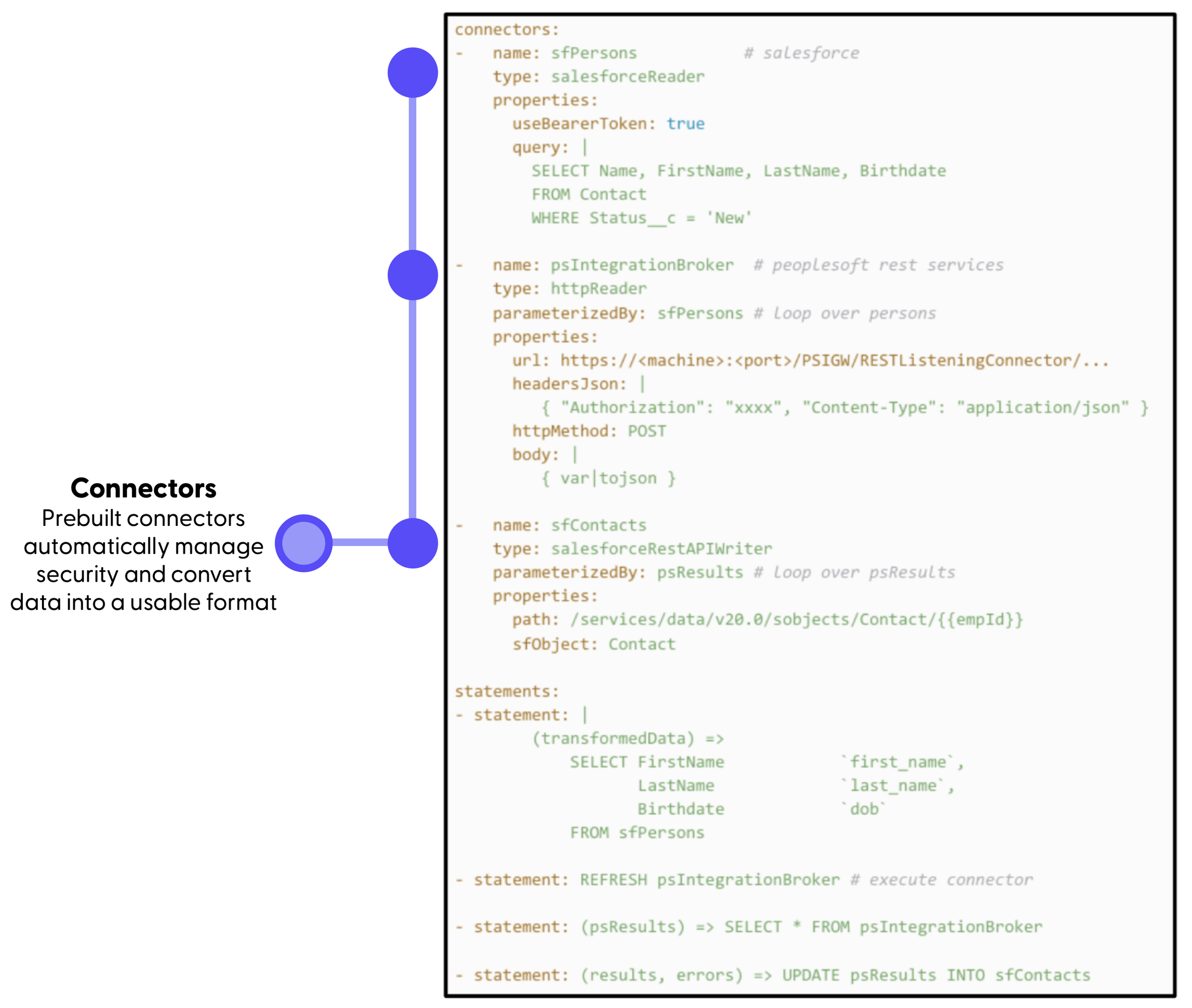

Data Integration

Example: Bidirectional data integration between Salesforce and PeopleSoft

Create a contact from Salesforce in PeopleSoft, then pull the contact ID back into Salesforce

- Read contact application form status changes in Salesforce

- Write new contact application forms to PeopleSoft via APIs

- Update Salesforce with the contact application identifiers from PeopleSoft
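The round trip above can be illustrated with a small sketch. Everything in it is an assumption made for illustration: the dictionaries stand in for the two systems, `create_in_peoplesoft` stands in for a real API call, and the status value and ID format are hypothetical.

```python
import itertools

# In-memory stand-ins for the two systems; all names here are hypothetical.
salesforce_forms = {
    "a01": {"name": "Ada Lovelace", "status": "Submitted", "peoplesoft_id": None},
    "a02": {"name": "Alan Turing", "status": "Draft", "peoplesoft_id": None},
    "a03": {"name": "Grace Hopper", "status": "Submitted", "peoplesoft_id": "PS-0001"},
}
peoplesoft_contacts = {}
_ps_ids = itertools.count(2)


def read_changed_forms(forms):
    """Return submitted forms that have not yet been synced to PeopleSoft."""
    return {
        sf_id: form
        for sf_id, form in forms.items()
        if form["status"] == "Submitted" and form["peoplesoft_id"] is None
    }


def create_in_peoplesoft(form):
    """Stand-in for an API call that creates a contact and returns its ID."""
    ps_id = f"PS-{next(_ps_ids):04d}"
    peoplesoft_contacts[ps_id] = {"name": form["name"]}
    return ps_id


def sync(forms):
    """Push new forms to PeopleSoft and write the returned IDs back."""
    synced = []
    for sf_id, form in read_changed_forms(forms).items():
        form["peoplesoft_id"] = create_in_peoplesoft(form)
        synced.append(sf_id)
    return synced


print(sync(salesforce_forms))
print(sync(salesforce_forms))  # second run finds nothing new to sync
```

Writing the PeopleSoft identifier back to Salesforce doubles as a sync marker, so a repeated run does not create the same contact twice.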

How Lingk Recipes Work

Lingk recipes are sets of instructions that automate complex data integrations (ETL, ELT). Every recipe can be scheduled or called from other recipes or apps via the Lingk API.

- Pre-built connectors for enterprise systems and endpoints, used to accelerate building better integration recipes.

- Use SQL for transformation and data operations. Other statement types help with executing tasks (such as moving SFTP files) and controlling data flows.

- Schedule simple (daily at 9am) and advanced (M-F 9am-5pm) intervals using a web-based scheduler. Trigger recipes from events, webhooks and other recipes via the Lingk API.
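To make the two schedule examples concrete, here is a minimal sketch of how such intervals could be modeled and checked. It does not reflect the Lingk scheduler itself: the `Schedule` class and `is_due` function are hypothetical, and reading "M-F 9am-5pm" as hourly runs from 09:00 through 17:00 is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Schedule:
    """Hypothetical schedule: run at minute 0 of the given hours on the given weekdays."""
    weekdays: frozenset  # 0 = Monday ... 6 = Sunday
    hours: frozenset


DAILY_9AM = Schedule(weekdays=frozenset(range(7)), hours=frozenset({9}))
WEEKDAY_BUSINESS_HOURS = Schedule(
    weekdays=frozenset(range(5)),   # Monday through Friday
    hours=frozenset(range(9, 18)),  # 09:00 through 17:00, hourly (an assumption)
)


def is_due(schedule, now):
    """Return True when `now` falls exactly on a scheduled hour."""
    return (
        now.weekday() in schedule.weekdays
        and now.hour in schedule.hours
        and now.minute == 0
    )


saturday_9am = datetime(2024, 1, 6, 9, 0)
wednesday_1pm = datetime(2024, 1, 3, 13, 0)
print(is_due(DAILY_9AM, saturday_9am))
print(is_due(WEEKDAY_BUSINESS_HOURS, saturday_9am))
print(is_due(WEEKDAY_BUSINESS_HOURS, wednesday_1pm))
```

The daily schedule fires on the Saturday, while the weekday schedule skips it and fires on the Wednesday afternoon.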

Ready to scale your data integrations?

Lingk Rhythm unifies your data to support modern, AI-ready business process automation and business intelligence — powering workflow automation, better customer experiences and better data-driven decisions across departments.